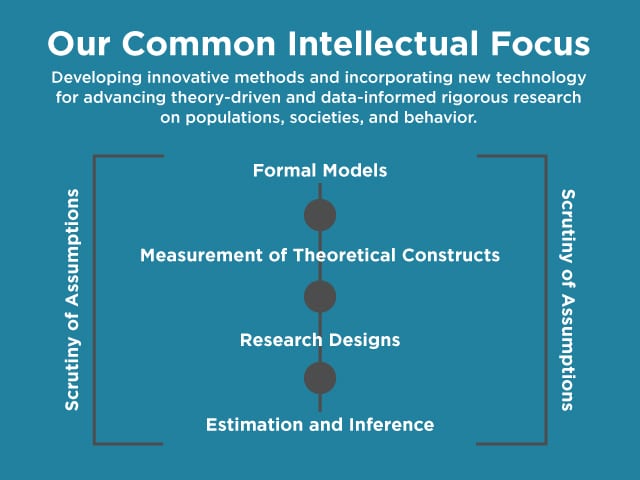

This is an interdisciplinary community of faculty and students interested in methodological research and its applications in the social, behavioral, and health sciences.

We bring together a collection of programs in quantitative research methodology and faculty members who seek to develop and apply advanced methods for research in the social and behavioral sciences. Together, we:

- promote innovative research ideas and tools with broad applications

- conduct a high-quality workshop series

- offer a wide range of courses on research methods

- provide excellent opportunities for research and teaching apprenticeships for talented students

Our goals are to create an intellectual niche, exchange research ideas, facilitate research collaborations, share teaching resources, enhance the training of students, and generate a collective impact on the University of Chicago campus and beyond.


The Committee is an academic unit that oversees three training programs and organizes a biweekly workshop that serves as an important forum for scholarly exchange.

The Certificate Program in Advanced Quantitative Methods is designed for doctoral students in the social, behavioral, and health sciences who wish to deepen their methodological training.

The Quantitative Methods and Social Analysis (QMSA) concentration in the MA Program in the Social Sciences (MAPSS) prepares a new generation of scholars to innovate methodologically and to use the theory of statistical inference to tackle challenging problems across a wide range of research areas.

The Quantitative Social Analysis minor offers undergraduate students at the University of Chicago the opportunity to develop strong statistical foundations, so that they learn how to draw valid inferences from quantifiable data and to critically evaluate empirical evidence in the social and behavioral sciences.